Database CI/CD

Deploy Database Changes and Integrate Development in a Single Process (the Sequel)

Database Task-Based Deployments – Part II

My previous post on this subject outlined why it’s important to be able to deploy database changes on the fly. This time, we take a look at why developers should document the reasons behind changes as part of the check-in metadata when looking to deploy database changes across environments.

First, some background: When practicing continuous delivery, any code check-in triggers a build process followed by deployment to the test environment and running tests. If there is a failure, people are notified that there is a broken build and they can quickly fix it. There are dual goals here: To avoid breaking the build as well as to release the latest check-ins as quickly as possible.

When the process is started from scratch, it’s relatively easy to implement. You can run parallel processes, and if one fails, you can quickly compare the changes between the failed execution and the previous, successful execution to find out what broke the build.

This process is proven effective but is still not being implemented to the same degree in database code (table structure, procedures, lookup content and so on) as it is in native code (such as Java, C#, C++). When I ask people why this is the case, they typically reply that they “don’t want to deploy database changes the same way as code. We want to control which changes in the database will be released and when”.

I can understand their concerns. It’s not enough to save just the object definition in the check-in without explaining or at least mentioning the circumstances of any given change, what the code revision relates to, and what functionality it provides. Information regarding the reason for the change (task, user story, requirement, epic etc.) is as critical as saving the object definition.

So how do we accomplish this?

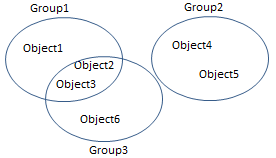

The concept here is to deploy continuous delivery database changes across environments while recording the reason for the change as part of the metadata of the check-in. Using simple mathematical groups, we establish a system whereby we can always add new groups to the set we promote in an organized and scalable manner. Each group represents the changes for a relevant task.

First we generate the build (delta) script of Group 1. Next we generate the build (delta) script of Group 1, Group 2, and so on. Any new group may add new objects or new revision for existing objects.

Practice

Let’s assume the following scenario: Our database contains 3 tables (T1 [c11 int, c12 varchar], T2 [c21 date, c22 int] and T3 [c31 int, c32 date]), 3 procedures (P1, P2 and P3). We’ll name the current structure V1.1.

- Developer A introduces the following changes to T1 (add new column – C13 date) and altered procedure P1 as part of Task1. We’ll name this structure V1.2-Task1

- A build process is triggered and promotes the changes made by developer A to the next environment – the QA

- Developer B introduces the following changes to T2 (add new column – C23 varchar) and altered procedure P1 (which includes the change made by developer A) as part of Task2. We’ll name this structure V1.3-Task1-Task2. As you can see Task2 is dependent of Task1

- A build process is triggered and promotes the changes made by developer B to the next environment – the QA.

- Developer C introduces changes to T3 (add new column C33 varchar), altered procedure P2 and altered procedure P3 as part of Task3. We’ll name this structure V1.4-Task3

- Developer C introduces changes to T1 (change C13 from date to int) and altered procedure P3 as part of Task1. We’ll name this structure V1.5-Task1-Task2

Now only changes related to Task1 and Task3 need to be promoted. Let’s review the current status:

| Object | Object Revision |

| T1 | 3 |

| T2 | 2 |

| T3 | 2 |

| P1 | 3 |

| P2 | 3 |

| P3 | 3 |

So the challenge now is to find which objects and which revision should be considered when generating the delta script for Task1 & Task3.

Let’s review the change history:

| Object | Change | Object Revision | Made for |

| T1 | New column C13 | 2 | Task1 |

| P1 | Altered | 2 | Task1 |

| P3 | Altered | 2 | Task1 |

| T2 | New column C23 | 2 | Task2 |

| P1 | Altered | 3 | Task2 |

| T3 | New column C33 | 2 | Task3 |

| P2 | Altered | 2 | Task3 |

| P3 | Altered | 3 | Task3 |

| T1 | Modify C13 | 3 | Task1 |

| P2 | Altered | 3 | Task1 |

Collecting changes made for Task1 returns the following:

| Object | Object Revision |

| T1 | 3 |

| P1 | 2 |

| P2 | 3 |

| P3 | 2 |

Collecting changes made for Taks3 returns the following:

| Object | Object Revision |

| T3 | 2 |

| P2 | 2 |

| P3 | 3 |

As we can see, if we execute the script of changes for Task1 followed with the script of changes for Task3, or if we first execute the script of changes for Task3 followed by the script of changes for Task1, we will not get the desired structure. This is because there are objects that were affected by different tasks and the final revision of the objects is not in the same task.

| Object | Expected Object Revision | Executing changes Task1 followed by Task3 | Executing changes Task3 followed by Task1 |

| T1 | 3 | 3 | 3 |

| T3 | 2 | 2 | 2 |

| P1 | 2 | 2 | 2 |

| P2 | 3 | 2 | 3 |

| P3 | 3 | 3 | 2 |

By using the group unions we can collect changes for Task1 and then collect changes for Task1 & Task3 together. And if Task7 was also approved, then we can collect changes for Task1, Task1 & Task3, and Task1 & Task3 & Task7.

Based on the concept of group unions and the fact that the latest revision of an object returns in the union, we can promote changes for all tasks by promoting changes for one task – followed by promoting changes for the first task union the second task and so on.

Doing so requires that the check-in metadata includes the reason (task, user-story, epic, requirement etc.) that is being used when generating the database build script and deploying it.

Database deployment process. How to integrate development and deployment?