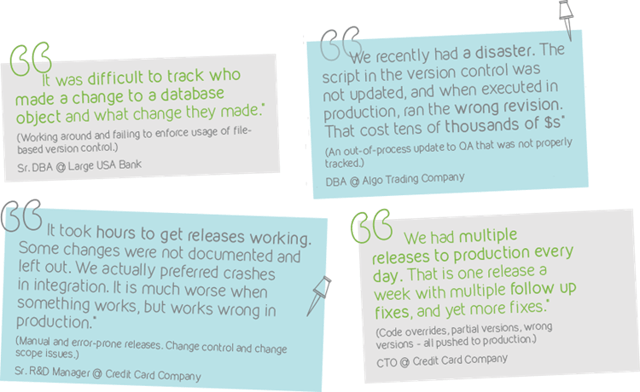

For many, automating the database may seem like a daunting task. The risk involved in doing so could lead to database downtime, or worse, a data breach. If done properly however, implementing continuous delivery for the database can be beneficial to your organization’s efficiency. Before making the jump, it’s important to understand the challenges of database continuous delivery to be best prepared for the integration.

A Bit Of Background

Continuous delivery and continuous integration are mainly about automation and testing, which requires a trustworthy database source control. In addition, it helps to automate the process of software delivery and infrastructure changes.

An ever increasing number of organizations are implementing continuous processes fueled by reports of the benefits, including quicker time to market, reduced costs and higher quality products.

In many cases, lost in this transition is the database. While it’s important to take into account the challenges of database continuous delivery, pitfalls can easily be avoided if the following best practices are implemented:

Database Build

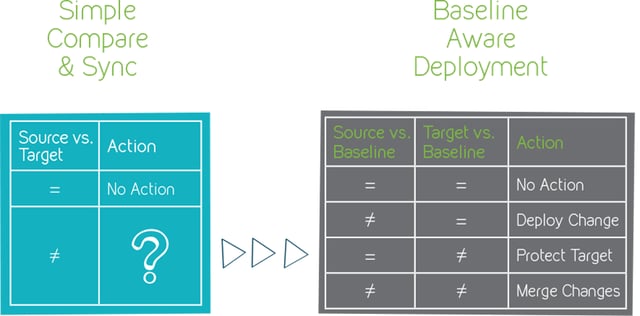

In order to generate the correct database change scripts, the build phase must have information on the current structure and the source control. But only having this information (the case with standard db schema compare and sync tools) is not enough.

Simply comparing two environments won’t provide the necessary insight regarding the nature of the differences. For example:

- A case where the difference conflicts with an emergency fix.

- The trunk/stash/QA environment was already updated with other changes from a different branch.

- The later environment (trunk/stash/QA) is more up-to-date regarding specific objects – thus the difference should not be part of the delta changes script.

Baseline Aware Analysis

This missing information is only available with baseline aware analysis. The input for the database build phase should absolutely be taken from the source control repository, which only includes changes that were checked-in and not changes that are still in work-in-progress mode.

This brings us to the starting point of the process – the source control – and how to make sure the build process retrieves the relevant changes.

Reliable Database Source Control Is a Must

In this phase, developers introduce changes to the database structure and reference look-up content or logic (procedures, function, etcetera ) in the database in two ways:

- Using a shared database environment for the team

- Using a private database environment for each developer

Both methods have advantages and disadvantages. Using a shared database environment reduces database code merges and saves time and money by updating the database structure based on the source control.

Using a private database environment causes many merges of the database code, but mitigates the risk of a colleague unwittingly overriding your code (or vice versa). In addition, a private database environment may increase management overhead, licenses, hardware, and other associated costs.

The primary reason why the private environment method is not commonly used relates to how developers publish changes from their private work space environment to the integration environment. Publishing changes should not revert changes made by someone else, and updating the private environment from the source control repository should not revert works-in-progress.

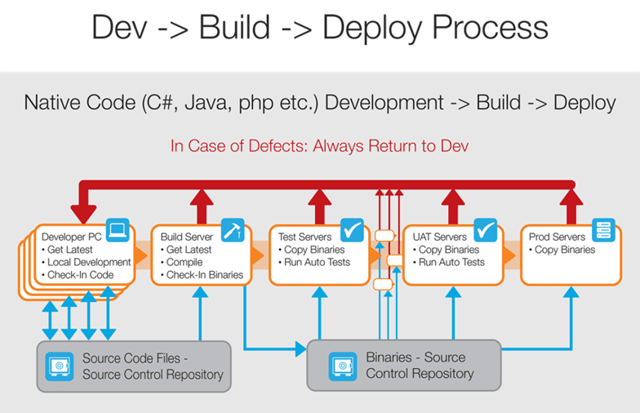

The same process of building the native code using only changes which are documented in the source control repository should be applied to database code changes. Developers work on the native code in the integrated development environment (IDE) and then check-in the changes to the source control repository without any additional manual steps.

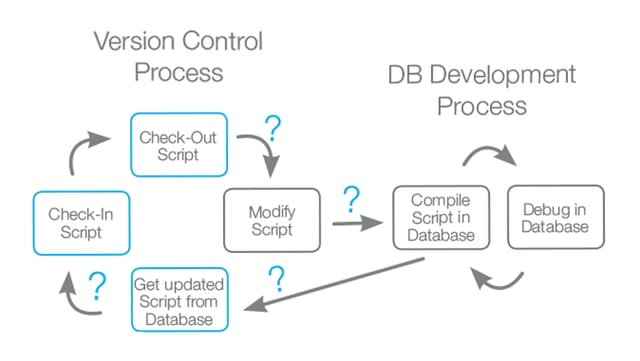

Having a file-based script that a developer is maintaining for his/her changes will create a few challenges that will be difficult to resolve and will require a lot of time.

Version Control Repository and the Database Structure

If ‘Developer A’ makes a number of changes to a script and ‘Developer B’ makes other changes to the script, neither of the developers can execute their entire scripts because the scripts override (or revert) each other.

Anyone with sufficient database credentials may log in to the database, introduce a change, and forget to apply the change in the relevant script of the file-based version control. In addition, there are other challenges that occur in the deployment phase but originate in previous phases:

- Controlling the order of the execution of scripts created by several developers.

- Maintaining the change scripts on a release scope change.

Instead of running many small scripts (in the same order that they’ve been executed in QA), which may change the same object several times, execute fewer scripts and change the object only once.

Database Deployment Logic

Another continuous delivery pressure point is revealed in database deployment:

- Can the database deployment process act as the native code?

- Can it replace the existing database/table in production with the new database/table from the development?

- Does it have to alter the existing database structure in production from the current state to the target state to preserve the data?

Deployment of native code artifacts – binaries of Java, C#, C++ – can be achieved by copying the new binaries and overriding the existing ones (current state has no effect on the binary content). The deployment of database code changes is done by changing the structure of the database or schema from the given state (current state) to the target state (end point).

When executing a script in the database, the given state (current point) must be the same as it was when the script was generated, otherwise the outcome is not predictable.

The Challenges Of Database Continuous Delivery Are Worth It

The benefits of continuous delivery are obvious. Increased productivity, faster time to market, reduced risk and increased quality, to name few. But most organizations are struggling to successfully extend and incorporate these practices into the database.

While it may seem a tall order, there are solutions to resolve these issues, and if implemented correctly, the database can flourish within a continuous delivery pipeline.

Do you know what are the best practices for database design? You might want to read our next article to find out.